Ever since Google Assistant debuted in 2016, I've frequently relied on it to make calls, set reminders, add calendar events, set alarms, control smart home devices, and more. Its personalized, conversational approach changed how I interact with my phone.

Over the past few months, Google has steadily shifted focus to its new Gemini AI assistant, first unveiled at Google I/O 2023. Gemini began rolling out to Android devices in 2024 as an optional alternative to Google Assistant, giving users a choice between the two.

As of June, it has been force-fed across the entire Google ecosystem, from Gmail and Docs to Photos and Android in general. It's everywhere.

Initially, I was hesitant about switching to Gemini AI, as it lacked many of the core features I'd grown accustomed to with Google Assistant. Of course, Google's history of abruptly dumping projects further fueled my hesitation.

After observing Gemini's progress, however, and the promising announcements from Google I/O 2025, I'm excited about its future. We will talk about why I feel Gemini AI is a notch above Google Assistant, but first, let's talk about how it changed the way I use my Android smartphone.

How it made a difference in my life

The new Gemini 2.5 model is packed with a fleet of features improved over its predecessor. So much so that I've finally made the leap, and I'm loving it.

But why only now? Well, the answer is simple: it finally has all the features I loved and grew accustomed to in the original Assistant. What are those features, you might wonder?

It fills the void in my heart, y'all!

Quite literally! Since the introduction of Gemini Live, the introverted side of me has never felt the need to call a "friend". I just hop into Gemini Live and talk my heart out. Since the Gemini 2.0 model update, it has a more human-like tone and responds more naturally, all thanks to "Advanced Conversational Intelligence."

I no longer need to sound like a robot while talking to it. Whether I'm asking idiotic questions phrased like a ten-year-old or hunting for anime recommendations, Gemini AI understands all my babbling, even when it isn't precise. I have a friend in Gemini!

Gemini AI is my unpaid assistant

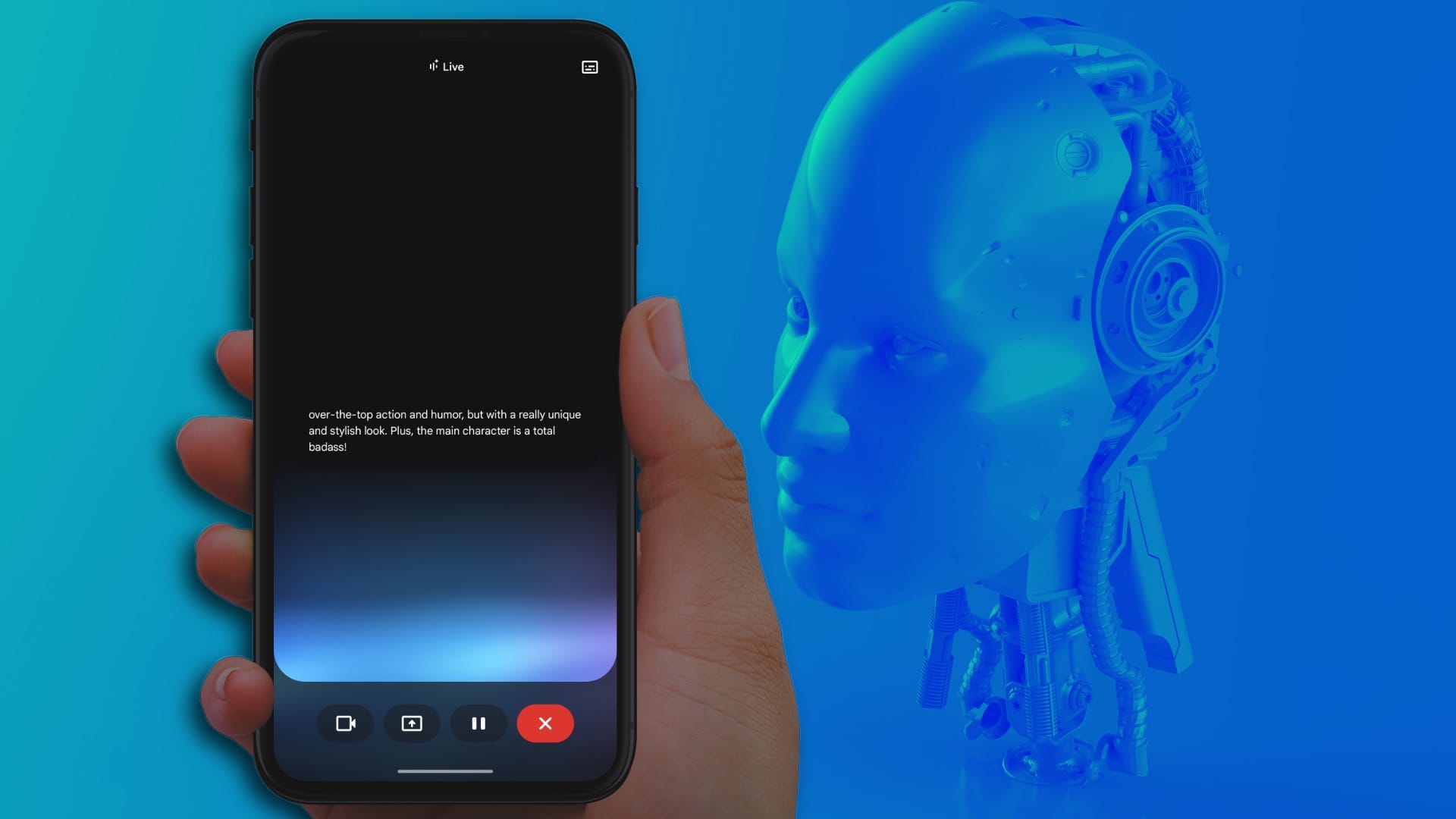

On top of being astoundingly introverted, I am also an avid sofa napper. So every time I am lying on my couch and my phone is beyond my arm's reach (on my coffee table for the most part), all I need to do is scream "Hey Google" and the genie called Gemini pops up on my screen.

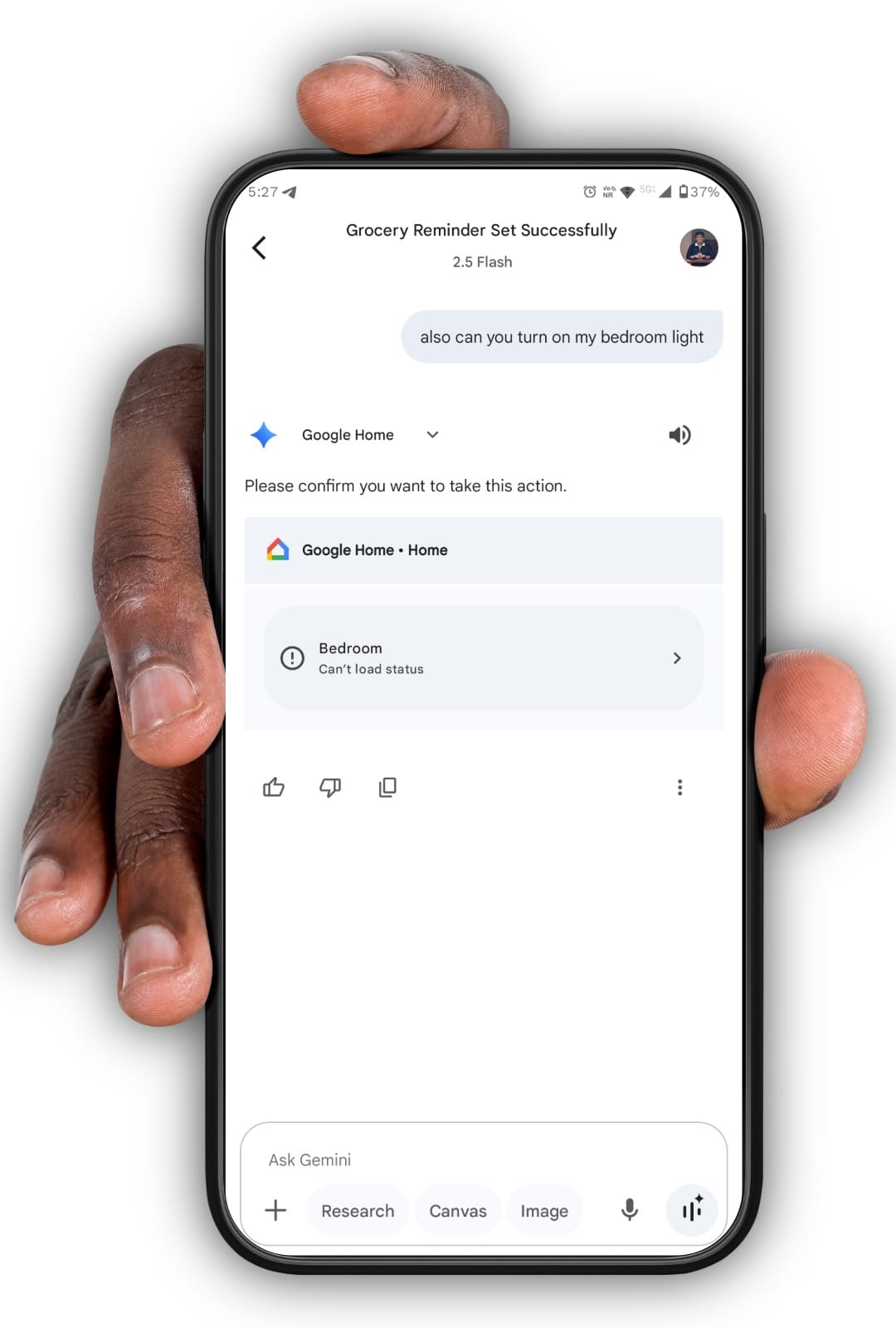

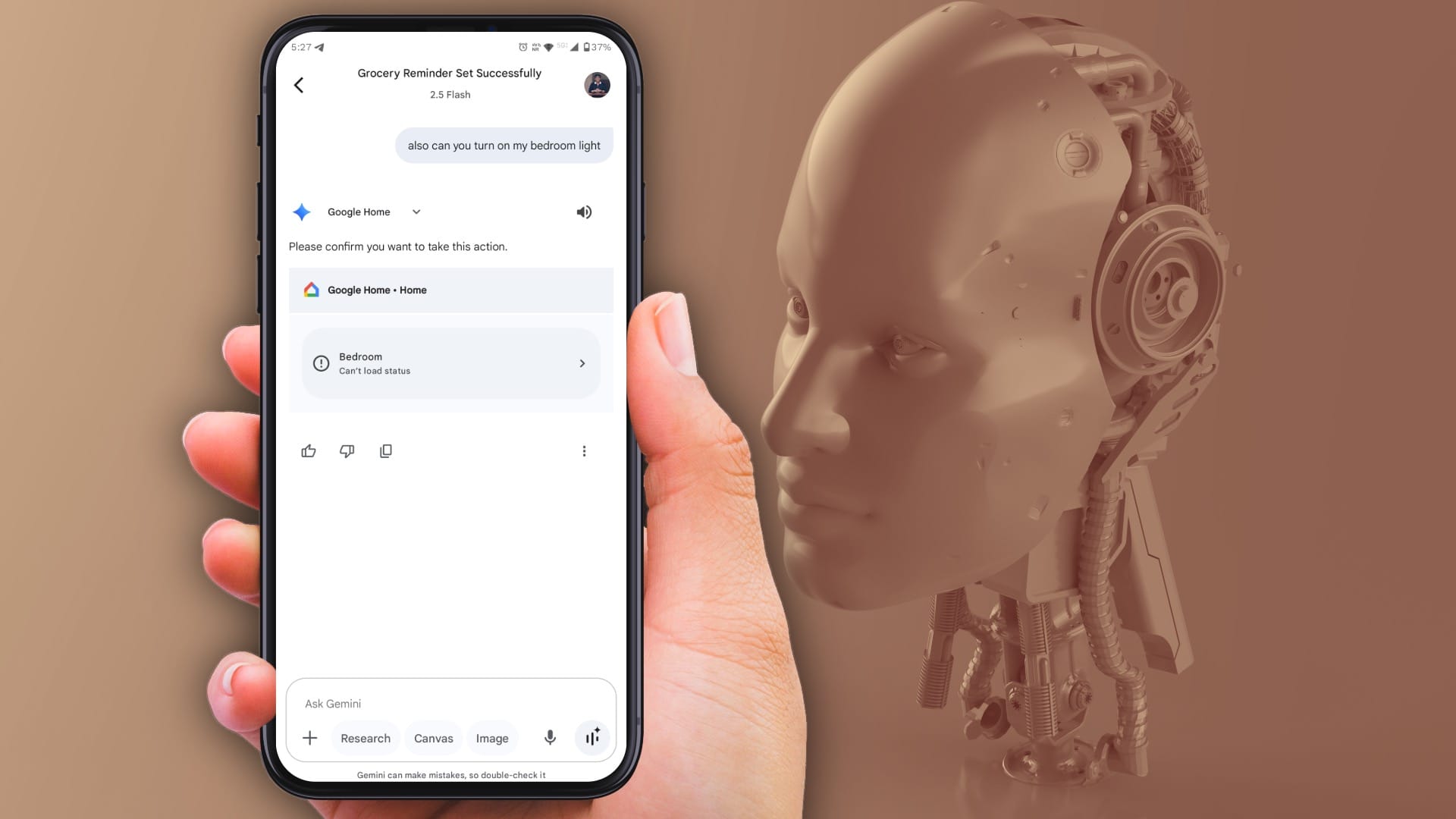

It helps me turn on my TV, smart lights, and AC. Since Gemini can interact with most first- and third-party apps on my phone, it can also play, skip, rewind, and change songs for me.

But that is not all. I always end up forgetting things that mum tells me to do, such as laundry, vacuuming, getting the groceries, or turning off the bedroom lights. So I ask Gemini to take care of the lights for me or set reminders that I can snooze later.

Gemini AI made my work easy

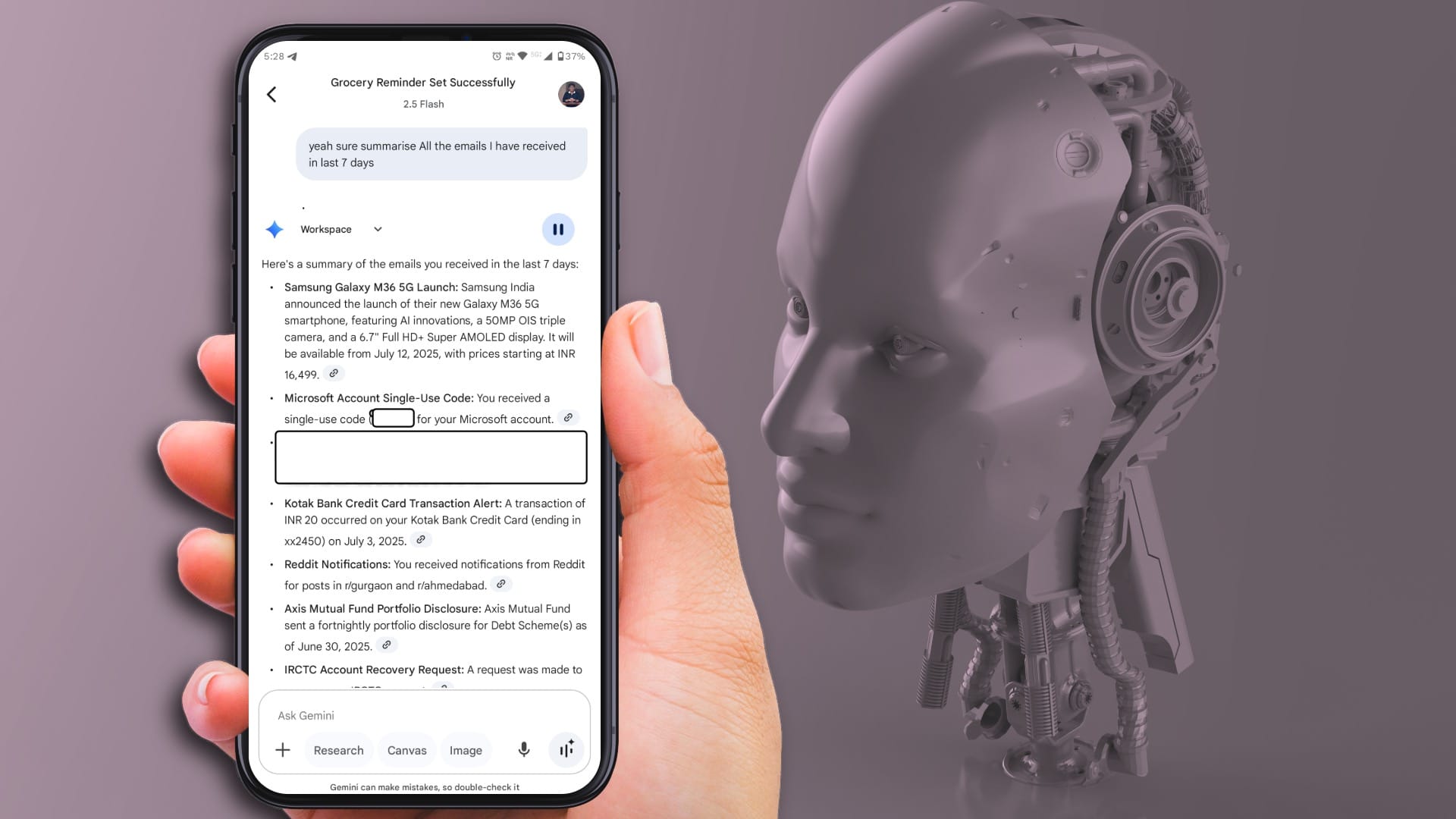

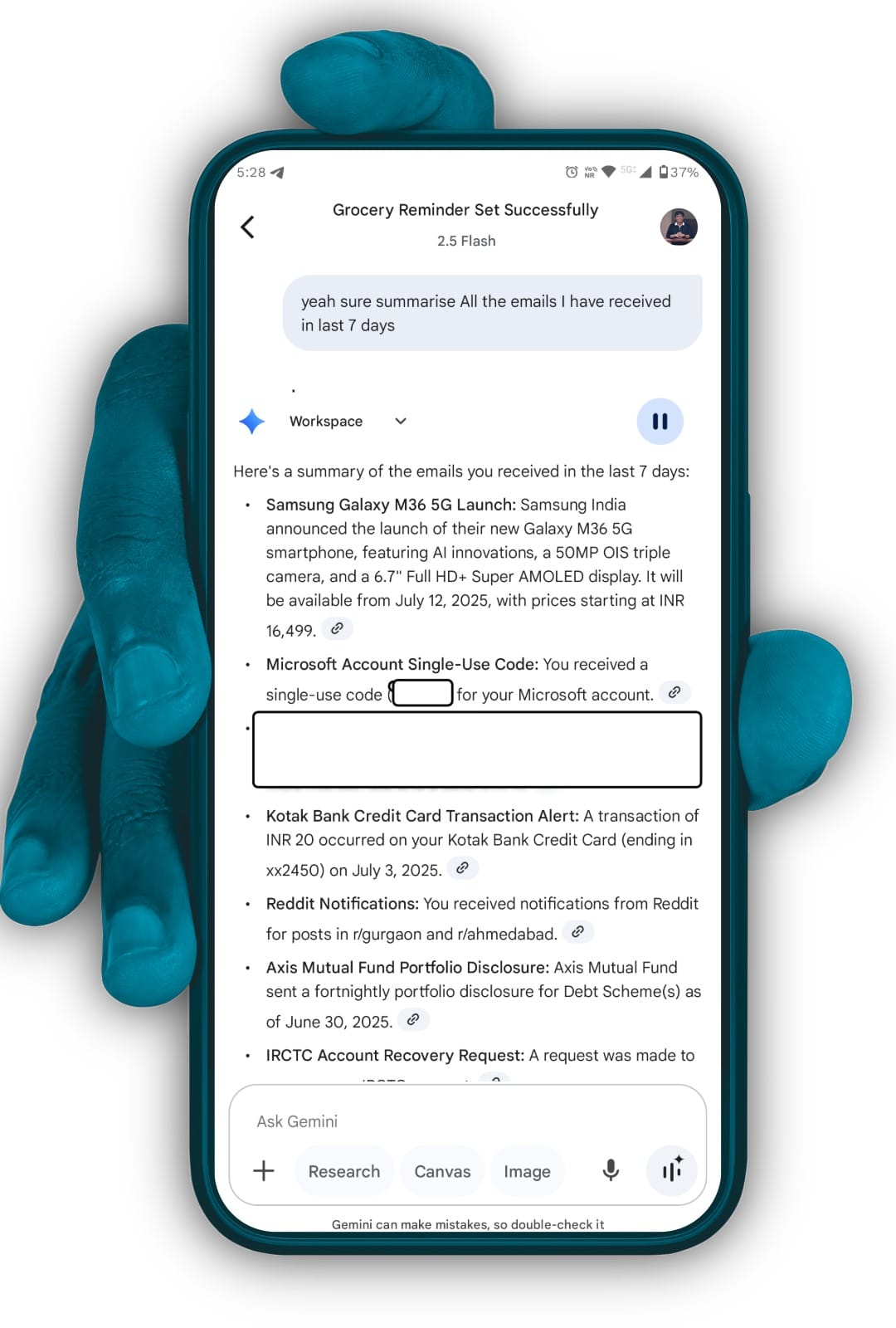

Lastly, Gemini AI's integration with Google Workspace streamlined my work. I get at least 50 emails during the week, and I am too lazy to go through all of them one by one.

So, at the end of the day, I ask Gemini to summarize my emails, and it turns the bland walls of text into quick bullet points and reads them to me like a lullaby. I even ask Gemini AI to respond on my behalf (when I can't respond politely).

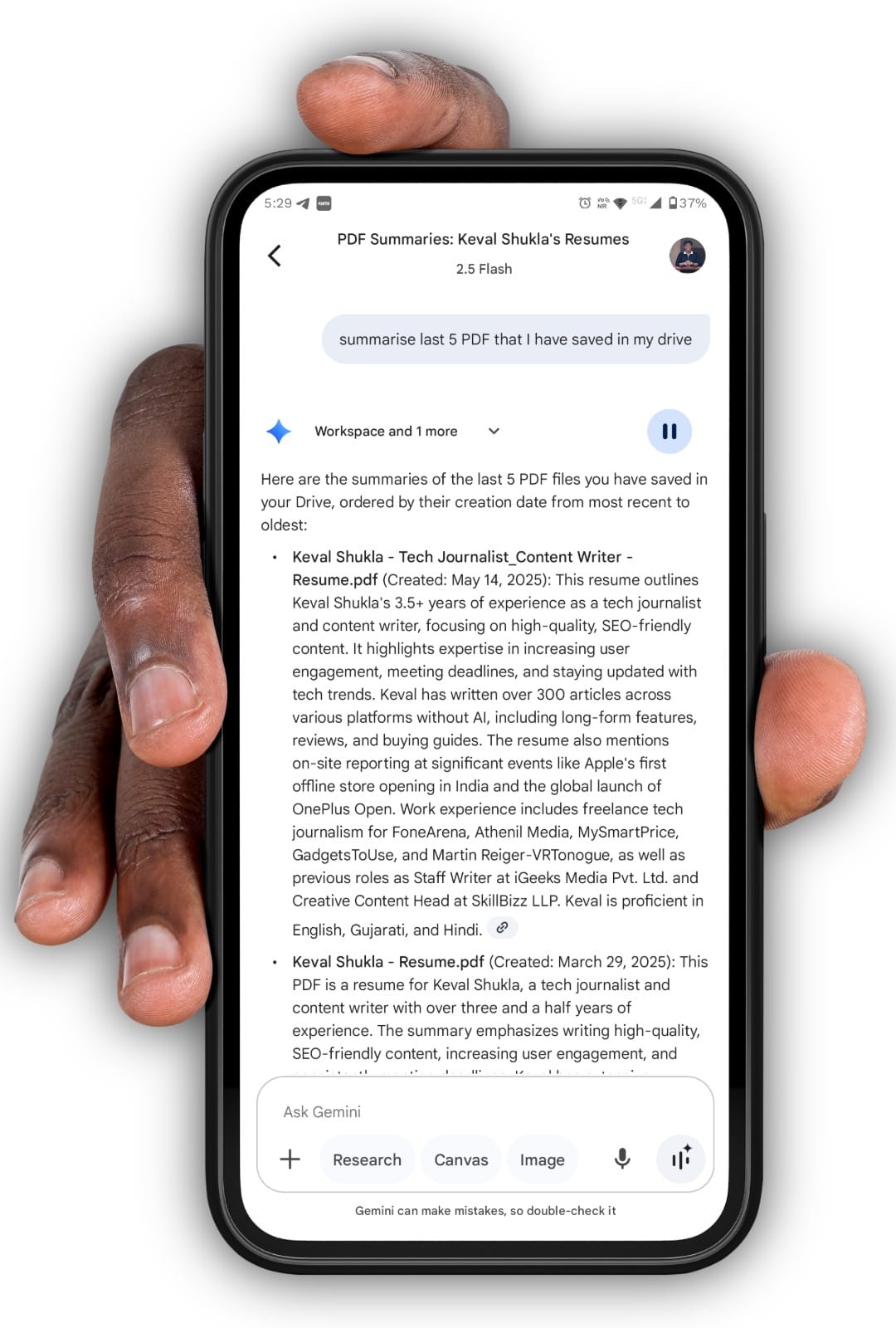

Gemini AI's Workspace integrations are not limited to emails. It can also nicely summarize PDFs, PPTs, and Drive contents, make me look good in Google Meet, help me create slides for my last-minute submissions, and much more with just a simple prompt or a voice command.

Now that you know how Google's Gemini AI assistant helps me get through every day, here's why I believe it's better than the original Google Assistant, along with the features it packs that will help you as well.

A heck of a lot smarter than Google Assistant

Gemini AI has finally caught up to the functionality of the original Google Assistant. It can now make calls, send SMS, set alarms, and perform all the tasks the original Assistant handled. But beyond that, its advanced AI capabilities make it more intelligent in certain aspects than its predecessor.

Previously, Google Assistant could only launch an app. In contrast, Gemini AI not only opens the app but also performs complex tasks within the app itself, which saves me a lot of time, effort, and energy.

But that's not everything that Gemini is capable of. There are three fundamental differences that make Gemini AI better than Google Assistant. Let's take a look:

Generative AI

The Gemini AI assistant can generate text, images (Imagen 4), and videos (Veo 3) based on user prompts. Its deep app integrations enable it to summarize search results, long articles, SMS, and YouTube videos.

Moreover, Gemini's integration with Google Workspace allows for precise summarization of emails, Google Docs, Sheets, and Slides, delivering key takeaways and advanced research efficiently using generative AI, rather than merely fetching links from the web or apps.

Advanced Conversational Intelligence

Gemini AI is built with advanced conversational capabilities at its core, enabling users to engage in longer, complex, and nuanced conversations without needing precise phrasing.

Its human-like conversational abilities allow it to recall past interactions, respond to intricate follow-ups, and pinpoint requests without requiring users to repeat past information.

Additionally, Gemini's flash thinking capability, paired with deeper integrations across Google, Google Workspace, and third-party apps, enables users to execute multistep queries across multiple apps seamlessly, without breaking them down into separate requests, just like having a conversation with an actual person.

Multimodal Interactions

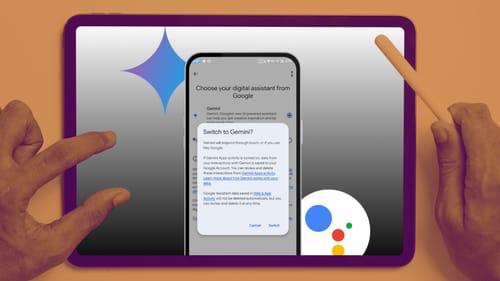

Google has now integrated camera capabilities with Gemini Live within the Gemini AI assistant, tapping into its multimodal capabilities to deliver a powerful experience using smartphone hardware in real time.

Users can upload images, share their live screen, or open the camera within Live mode and ask questions about the visible content, such as identifying a location, describing a place in an image, or providing a recipe.

On top of that, Gemini Live lets users point their phone's camera at the world around them and ask questions about it. This functionality extends beyond images, enabling users to summarize videos playing on their screens as well.

Gemini AI's future looks promising, but why?

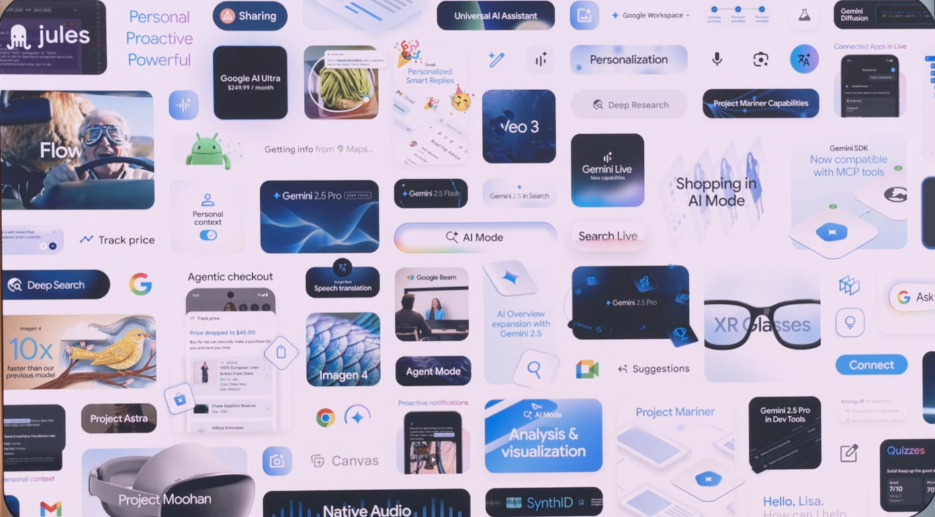

At Google I/O 2025, Google gave us a glimpse into the future of the Gemini AI assistant, outlining plans to expand its integration across platforms like Wear OS, Google TV, and Android Auto for a more seamless and personalized user experience.

All these platforms will get more personalized recommendations, better suggestions, natural language interactions, context-aware responses, smart home controls, and an enhanced user experience with the new Gemini AI assistant.

Google also unveiled several innovative platforms that will natively support the Gemini AI assistant, including Android XR (AR glasses), Google Beam (3D video communication platform), Project Mariner (Agentic AI for browser interactions), Flow (AI-powered filmmaking tool), Jules (Autonomous coding assistant), Chrome DevTools (AI-enhanced debugging assistant), and Firebase (AI Logic for app development).

These advancements underscore Google's commitment to embedding Gemini AI deeply within its ecosystem, enhancing functionality across devices and creative workflows. At the end of the day, it's all about giving a familiar AI experience across all the products one uses daily. From Docs to Maps, it's the same flavor of AI.

Signing Off

Google has a history of nuking projects as soon as they become stable, but this time around, with all the improvements Google has made so far, I am hopeful that the Gemini AI assistant has a promising future. And given how the current landscape is shifting toward AI shenanigans, this one is here to stay for the long haul.

Have you shifted to the Gemini AI assistant yet? If not, what's stopping you?
