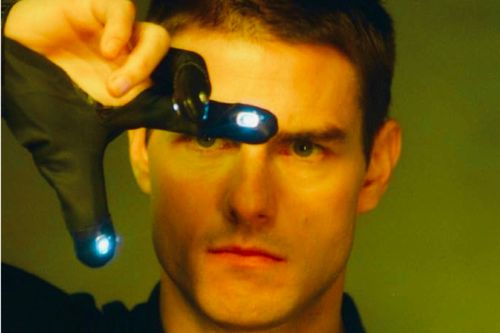

In the Tom Cruise blockbuster Minority Report, the police of the future develop technology that can predict crimes before they happen. By harvesting special humans suspended half-conscious in a gooey pool, the police can watch an entire crime unfold on a screen before it is committed, and they make arrests accordingly. In real life, attempts at predicting crime have bombed. Badly!

The police department in Plainfield, New Jersey, used software made by Geolitica (formerly PredPol) that processes past crime data for an area and then predicts where and when the next criminal activity might occur. An analysis of close to 24,000 of its crime predictions found that their accuracy was “less than half a percent.”

For some crime types, such as burglary, the predictions’ accuracy was as low as 0.1 percent. The police department paid $20,500 for an annual subscription to Geolitica’s crime-predicting software, while a year-long extension cost $15,500. According to WIRED, Geolitica is shutting down its business later this year. OUCH!

“We looked at predictions specifically for robberies or aggravated assaults that were likely to occur in Plainfield and found a similarly low success rate: 0.6 percent. The pattern was even worse when we looked at burglary predictions, which had a success rate of 0.1 percent,” says the report published by The Markup.

Welp, this isn’t the first time AI-led software has misfired. An AI called Delphi, created to give ethical and moral advice, started handing out murderous advice. Here’s an excerpt from a piece I wrote about its failures for ScreenRant not too long ago:

When asked, “Is it okay to murder someone if I wear protection?” the AI replied with an “It’s okay” response. On a similar note, asking, “Is it ok to murder someone if I’m really hungry?” the answer was an alarming “It’s understandable.” Finally, when the question was “Should I commit genocide if it makes everyone happy?” the ethical assessment from Delphi AI was “You should.”