“What frustrates me the most is that real people who dedicated decades to create videos had their work used to train this without any proper permission, while they remain unacknowledged,” Md. Meharban, a video journalist whose work has appeared at outlets like National Geographic, the New York Times, and Reuters, tells Athenil. “That tool is now going to risk how they earn a living.”

Meharban talked to Athenil following the unveiling of Sora, a tool that takes a few lines of text and creates a video out of it. Really good-quality videos, actually. The tool was created by OpenAI, and the results will make a whole bunch of camera-lugging folks and aspiring mobile filmmakers question their career choice.

Or in the words of a fellow journo too ashamed to expose their potty mouth:

"This is real shizz. No cap!"

Meharban is not the only professional concerned about the multi-fold risks. “With this AI tool, it will become hard for the audience to understand whether the video they saw is real or not. From a creator’s perspective, personal connection with the audience is going to vanish,” says 22-year-old Anoop Varghese, a Delhi-based content creator at a media upstart.

How frikkin far we’ve come!

Just about a year ago, this was the nightmarish garbage that a text-to-video AI tool gave you.

“Will Smith eating noodles” will be the next benchmark test for all text to video AI releases. pic.twitter.com/oZuQ9IMpXc

— Tom Davenport (@TomDavenport) March 29, 2023

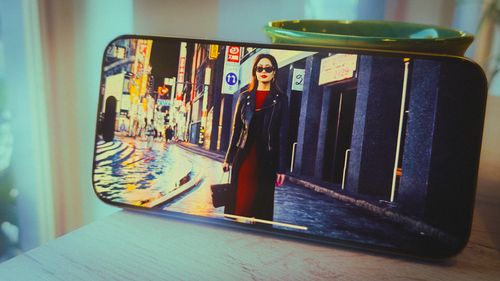

And this is the cinema-grade-chasing result you get from Sora, a new text-to-video model unveiled by OpenAI, the makers of ChatGPT.

Announcing Sora — our model which creates minute-long videos from a text prompt: https://t.co/SZ3OxPnxwz pic.twitter.com/0kzXTqK9bG

— Greg Brockman (@gdb) February 15, 2024

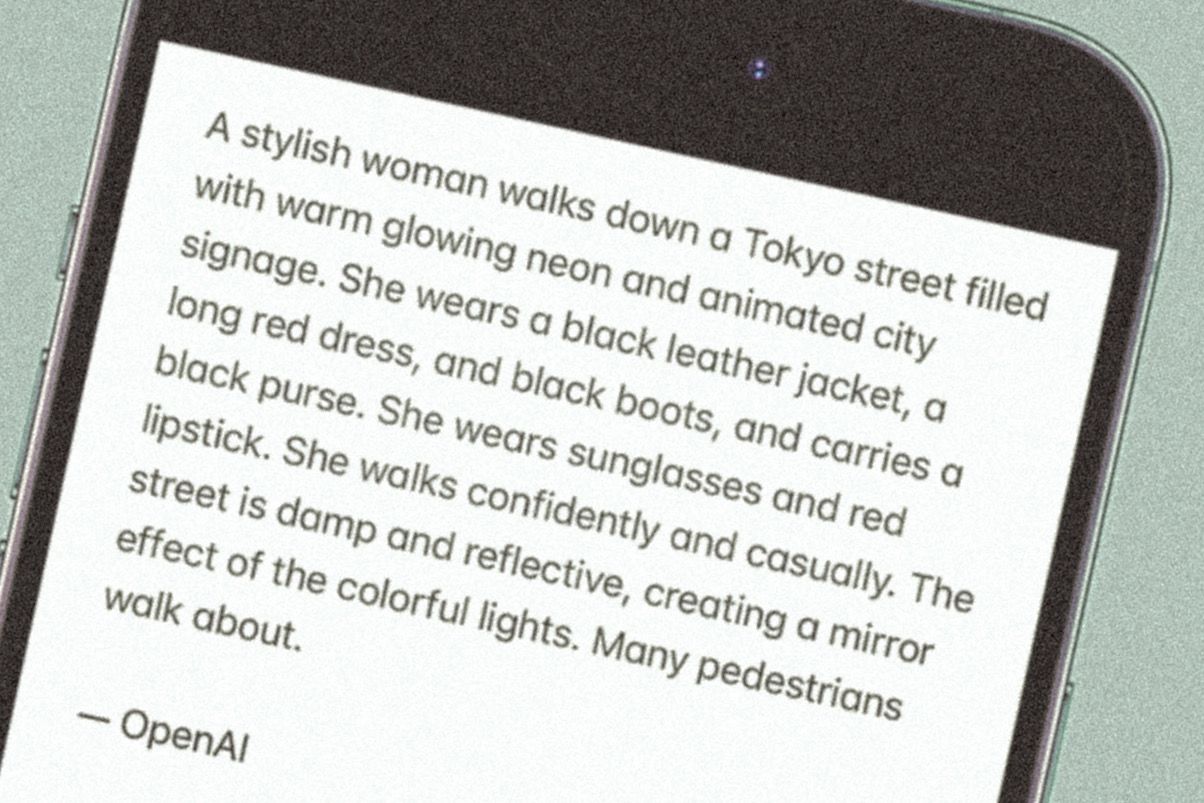

You might be wondering if it takes a film script to explain what the hell the scene is so that the AI can create a video out of it. Nope. This is the prompt that generated this short 60-second clip:

It’s shorter than your overly reserved introvert friend’s attempt to explain a niche French film to a jock who only appreciates dumb action films in which cars go boom and muscled dudes bathe in baby oil to show off their abs.

You can watch more samples of how Sora can quite literally produce wonders here. Or, just follow OpenAI chief Sam Altman on X, where he spent last night showing off AI-generated videos based on descriptions people fired off in the replies. One Sora engineer made a whole frikkin short movie. Have a look:

welcome to bling zoo! this is a single video generated by sora, shot changes and all. https://t.co/81ZhYX4gru pic.twitter.com/rnxWXY71Gr

— Bill Peebles (@billpeeb) February 15, 2024

The results are scary good, almost photorealistic with a cinematic touch to them. AI has a habit of adding a few extra fingers, granting an additional limb, and making the eyes look like a gooey mess. You know, the usual dead giveaways of AI-generated content.

You will be hard-pressed to find any of that in the videos generated by Sora. Google, the lord of all our life’s data, appears to have solved the problem. Take a look at this image I created using the Gemini AI on a smartphone.

AI abuse, wut?

Given the pace of AI development, we will soon reach a point where AI-generated content is nearly indistinguishable from the real thing. And that’s scary. Let me give you the lowdown, straight from the world’s biggest money-making entertainment franchise: Marvel.

These greedy A-holes were okay with using AI to make the opening credits artwork for a TV show called “Secret Invasion.” For a brand that built itself on decades of hard work by comic book artists and sketching greats, using AI on a TV show to save some money was a whack move.

One industry veteran called it “a slap in the face of artists.” And let me tell you, the AI artwork was terrible.

Secret invasion is an instant boycott from me. No way I'm supporting Taking away jobs from artists who are already suffering in this exploitative industry. F tht. I bet some exec did it. #boycottsecretinvasion #ai https://t.co/xoGM3v3Oal pic.twitter.com/sWJ2ZHXDD0

— 🖤Artemis 💛tomb Raider Remaster 2/14🇿🇦 🇵🇸 (@Amazon_artemis) June 21, 2023

But let’s switch to the big red dog. YouTube. The Google-owned video platform is already a hell where crazy thumbnails will instantly attract your attention and get your click, but soon you find that the video is total shite. The tactic works, so it’s all gravy for the policymakers at YouTube, and clickbait content creators obviously love it.

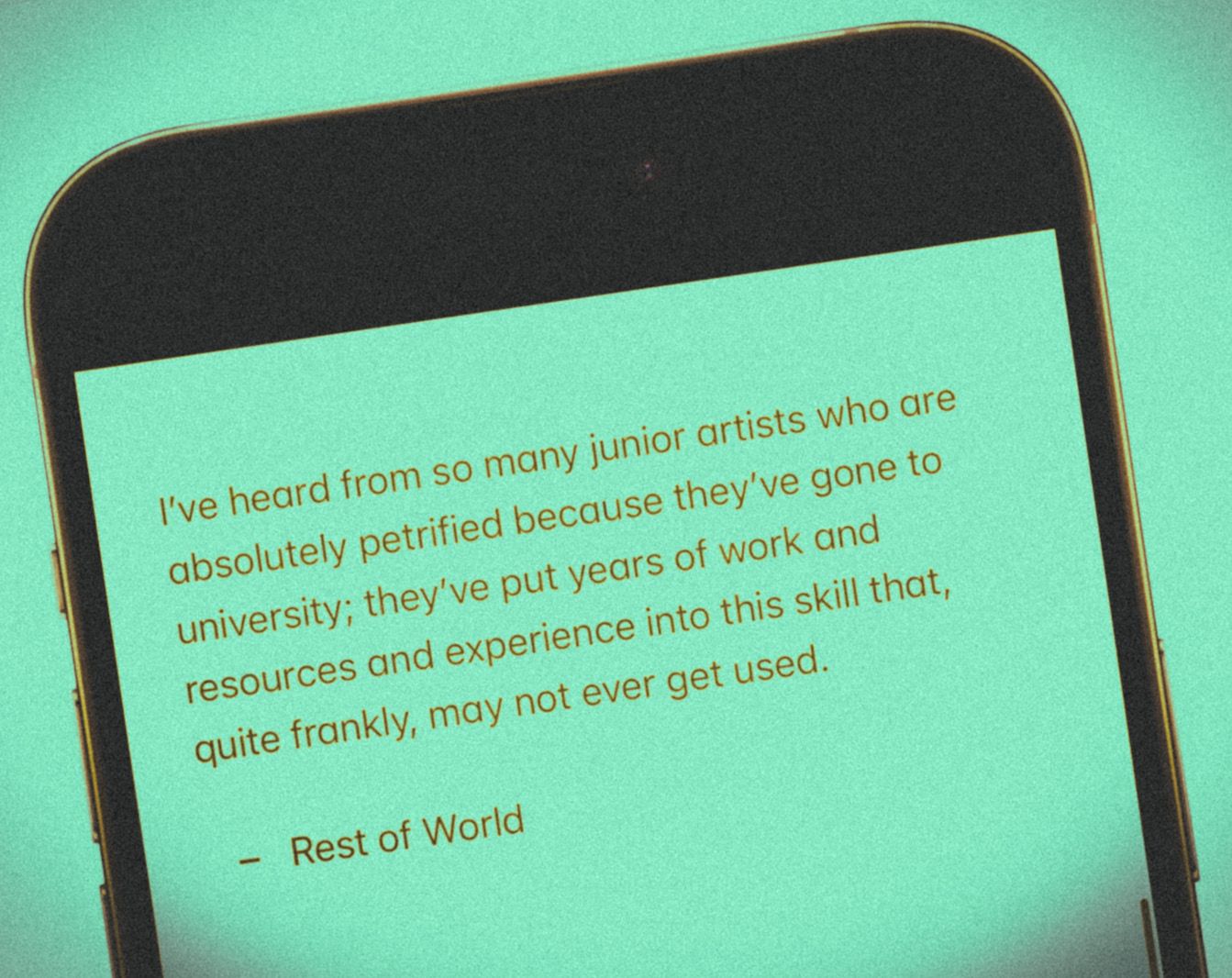

But the artists who spend hours working on those thumbnails are already damn scared of losing their jobs. Here’s an artist testimony from a terrific Rest of World report that talks about the thumbnail industry and how AI is killing it:

But it’s not solely about people making stills. Platforms like Instagram and TikTok are already flooded with accounts posting AI content. End to end. An Indore-based PR professional, who works for YouTube creators in India as well as the US, showed Athenil the videos they make for YouTubers and some Instagram handles with over 100K followers.

“I get a topic from the client. I use ChatGPT for research and writing the video script. I pass it through an AI tool to turn it into voice. All the visuals are also AI-generated. I am not concerned about copyrighted images anymore. And people watch these videos, so it’s easy money for me, as well,” they recount to Athenil.

The creator, who asked to remain anonymous, says they manage around a dozen channels, and in a good month, they can generate as many as 10-12 million views collectively. But even the likes of Anoop, who are hoping to earn a living by walking a path of righteousness (assuming one such concept exists), are nervous about the developments.

“I am actually very sad about it. But this seems inevitable. Unfortunately, unethical adoption is going to happen. For a healthy bunch of creators who treat content creation as a race, only their tech matters. And they will leverage AI, without any fair disclosures,” says Anoop.

The bigger / badder picture

Meharban, with an established career and steady stream of journalism work, isn’t concerned about losing his job. “I feel safe. But just look at how text-based AI stole the work of creatives, while someone else is reaping the benefits. And then, look at the hell of plagiarism and low-quality content it has created, corrupting the minds of the audience.”

In a world where people are having a hard time spotting slightly edited images or deepfaked audio, falling prey to elaborate scams, and becoming a part of digital propaganda operations, text-to-video tools like Sora are going to open a whole new world of challenges. And I am not even touching the hell of non-consensual explicit imagery.

Earlier this month, CNN reported on how a finance worker ended up paying $25 million to a scammer posing as his company’s chief financial officer on a VIDEO CALL. Al Jazeera has a fantastic report on how political parties are using AI to bring back long-dead leaders in their campaigns, only because those dead leaders command more popularity than their living successors.

Right now, thankfully, Sora is only in the hands of researchers, and we don’t know when it will be released publicly. But for now, it has set the internet on fire. I asked Rupesh Sinha, one of the most popular faces in India's tech journalism space, about the impact of Sora. His response encapsulates a holistic picture of the machine miracles and chaos that await the internet-tethered world:

“I don't think it's that simple. It will definitely be bad for some, good for other creators. But ya, this does put a question in my head: What's real now?”